What is Data Standardization?

It is a preprocessing method that transforms continuous numerical data so that its features are on comparable scales and look closer to normally distributed.

Why?

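Many scikit-learn models assume that features are centered around 0 and have variances of a similar magnitude, and they can behave badly if the features do not look roughly like normally distributed data. If continuous features are left on very different scales, we risk biasing the model toward the features with the largest values.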

Two common methods for standardization are covered here:

- Log Normalization

- Feature Scaling

These methods are applied to continuous numerical data.

When?

- The model operates in a linear space (e.g. KNN and k-means, which rely on distances between points), so the data must also be in a linear space.

- Some features have high variance, which could bias a model that assumes normally distributed features.

- The dataset has continuous features on different scales.

For example, a dataset with height and weight as features needs to be standardized so that both features are on the same linear scale.

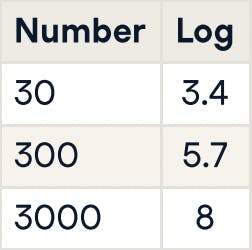
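To see why the scale matters for distance-based models, here is a minimal sketch with hypothetical heights (metres) and weights (kilograms). The values and the `euclidean` helper are illustrative assumptions, not taken from the original dataset.

```python
import numpy as np

# Hypothetical people: [height in metres, weight in kilograms]
a = np.array([1.70, 70.0])
b = np.array([2.00, 70.0])  # 30 cm taller, same weight
c = np.array([1.70, 73.0])  # same height, 3 kg heavier

def euclidean(p, q):
    """Straight-line distance, the kind of metric KNN and k-means rely on."""
    return float(np.sqrt(np.sum((p - q) ** 2)))

# Unscaled, a 30 cm height gap counts far less than a 3 kg weight gap
print(round(euclidean(a, b), 2))  # 0.3
print(round(euclidean(a, c), 2))  # 3.0
```

Because weight is measured in larger numbers, it dominates the distance even though a 30 cm height difference is arguably the bigger change.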

What is Log Normalization?

- A log transformation is applied to the values.

- It is used when the variance of one column is significantly higher than that of the other columns.

- The natural log (base e) is used.

- It captures relative changes and the magnitude of change. Because the log is only defined for positive numbers, all input values must be strictly positive.
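The "relative changes" point can be checked with a small sketch. The values below are hypothetical; the idea is that two pairs with the same ratio end up with the same difference after the log, since log(b) - log(a) = log(b / a).

```python
import numpy as np

# Two pairs of hypothetical values with the same relative change (each doubles)
small = np.array([10.0, 20.0])
large = np.array([1000.0, 2000.0])

# On the raw scale, the absolute differences are very different
raw_small_diff = small[1] - small[0]   # 10.0
raw_large_diff = large[1] - large[0]   # 1000.0

# After the natural log, both differences equal log(2), about 0.693
small_log_diff = np.log(small[1]) - np.log(small[0])
large_log_diff = np.log(large[1]) - np.log(large[0])

print(raw_small_diff, raw_large_diff)
print(small_log_diff, large_log_diff)

# np.log expects strictly positive inputs: log(0) is -inf and the
# log of a negative number is nan (NumPy emits a RuntimeWarning)
```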

Let's see the implementation.

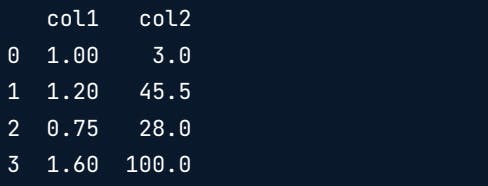

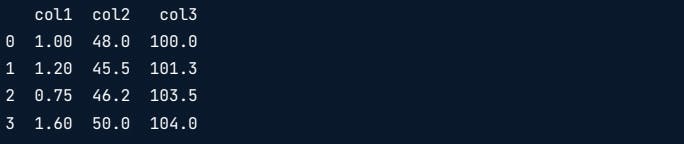

```python
print(df)
```

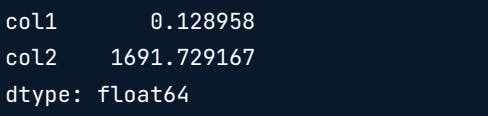

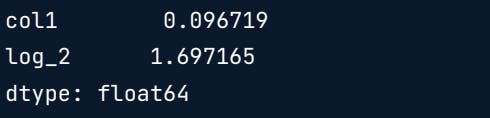

```python
# Variance of each column
print(df.var())
```

We will use NumPy's `np.log` function, which computes the natural logarithm, to perform the normalization.

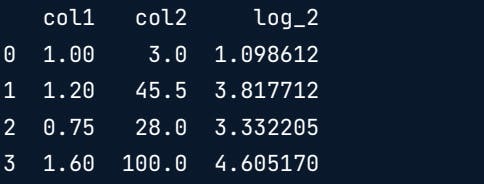

```python
import numpy as np

# Natural log of the high-variance column, stored as a new column
df["log_2"] = np.log(df["col2"])

print(df)
```

Now let's compare the variances of `col1` and the log-transformed column.

```python
# Variance of each column (pandas uses ddof=1, matching df.var() above)
print(df[["col1", "log_2"]].var())
```
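Since the original DataFrame is only shown in images, here is a standalone sketch of the same steps with hypothetical values for `col1` and `col2` (assumed data, chosen so that `col2` has a much larger spread and only positive values).

```python
import numpy as np
import pandas as pd

# Hypothetical data: col2 has a far larger spread than col1
df = pd.DataFrame({
    "col1": [1.00, 1.20, 0.80, 1.10, 0.90],
    "col2": [10.0, 250.0, 1200.0, 35.0, 5000.0],
})

# Before: col2's variance dwarfs col1's
print(df.var())

# Natural log of the high-variance column (all values are > 0)
df["log_2"] = np.log(df["col2"])

# After: the log-transformed column has a much smaller variance
print(df[["col1", "log_2"]].var())
```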

What is Feature Scaling?

This method is useful when:

- continuous features are on different scales.

- the model operates in a linear space.

Each feature is transformed so that its mean is 0 and its variance is 1: the column's mean is subtracted from every value, and the result is divided by the column's standard deviation.
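This is the z-score, z = (x - mean) / std. A minimal sketch computing it by hand with pandas, on hypothetical height/weight values; it uses `ddof=0` (the population standard deviation), which is the convention scikit-learn's `StandardScaler` follows.

```python
import pandas as pd

# Hypothetical features on very different scales
df = pd.DataFrame({
    "height_m": [1.55, 1.62, 1.70, 1.78, 1.85],
    "weight_kg": [52.0, 61.0, 70.0, 82.0, 95.0],
})

# z = (x - mean) / std, computed column by column
z = (df - df.mean()) / df.std(ddof=0)

print(z.mean().round(6))  # each column's mean is ~0
print(z.var(ddof=0))      # each column's population variance is 1
```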

Looking across the features, you can see how much their variances differ.

```python
print(df)
```

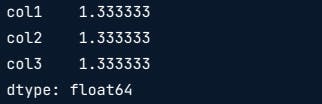

```python
print(df.var())
```

We use the `StandardScaler` class from scikit-learn's `preprocessing` module to do the scaling.

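```python
import pandas as pd
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

# fit_transform returns a NumPy array, so wrap it back into a DataFrame
df_scaled = pd.DataFrame(scaler.fit_transform(df), columns=df.columns)
```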

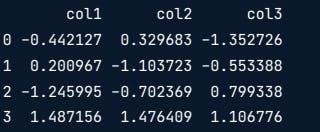

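```python
print(df_scaled)

# Check the variances of the scaled columns (not the original df).
# pandas uses ddof=1 while StandardScaler uses ddof=0, so these
# come out as n / (n - 1) rather than exactly 1.
print(df_scaled.var())
```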
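For a self-contained version, here is a sketch on hypothetical height/weight data (an assumption, since the original data is only shown as images). It also prints the fitted `mean_` and `scale_` attributes and checks that the result matches the manual z-score formula with `ddof=0`.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import StandardScaler

# Hypothetical features on very different scales
df = pd.DataFrame({
    "height_m": [1.55, 1.62, 1.70, 1.78, 1.85],
    "weight_kg": [52.0, 61.0, 70.0, 82.0, 95.0],
})

scaler = StandardScaler()
df_scaled = pd.DataFrame(scaler.fit_transform(df), columns=df.columns)

# The fitted per-column means and (population) standard deviations
print(scaler.mean_)
print(scaler.scale_)

# StandardScaler divides by the population std (ddof=0),
# so it matches the manual z-score computed with ddof=0
manual = (df - df.mean()) / df.std(ddof=0)
print(np.allclose(df_scaled.to_numpy(), manual.to_numpy()))  # True

# pandas' .var() defaults to ddof=1, so it reports n / (n - 1) = 1.25 here
print(df_scaled.var())
```

In a real pipeline, the scaler would typically be fitted on the training split only and then applied to test data with `scaler.transform(...)`, so that test-set statistics do not leak into training.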

Check out the exercises linked to this here

Interested in Machine Learning content? Follow me on Twitter and HashNode.